Safely and Securely Back Up Your Virtual Machines on Someone Else's Computer

In healthcare, it's critical that IT professionals have a solid backup game. The workflow at job.current has undergone several iterations of backup strategies, and our offsite backup plan has never been as cohesive as I'd like it to be. Our infrastructure has undergone many changes over the last two years, and upon realizing that our offsite backup strategy had gaps, I escalated the priority of closing those gaps to SEV1.

Prior to Windows 7's demise, we were running several Citrix XenServers, which served up a Win 7 VDI grid as well as infrastructure servers (LDAP, print servers, file servers, app servers), and one legacy VMware ESXi server (more of the same minus the VDIs). Each of our servers was running on identical 2U SuperMicro whitebox hardware with 24 cores of Intel Xeon CPU power, 192GB of memory, and a RAID-6 array of 2TB spinners. As we phased out our VDI infrastructure, we were also looking to move away from XenServer completely, as Citrix had unfavorably changed their licensing model. After thorough testing to identify a viable path for migrating our Xen VMs, we completed a project to phase out our XenServer grid entirely in favor of a balanced ProxMox and Microsoft Hyper-V infrastructure over the course of a few weeks with minimal downtime.

Surely as part of this overhaul, we spent a healthy wad of cash to buy all new hardware, right? Alas, as dutiful stewards of a tight small-org healthcare IT budget, I'm afraid we did not. However, any IT professional worth their salt will tell you that you can breathe new life into a PC by swapping out a spinning disk for solid state storage, and this old adage holds true for servers. The servers were re-provisioned into three ProxMox boxes and three Hyper-V boxes. In addition, we have two SuperMicro boxes with more modest processors running TrueNas.

My initial plan with ProxMox was to run only the OS on a cheap local SSD and to run the VMs over 10G fiber from NFS shares on the TrueNas boxes, which host both SSD and spinning-disk pools. I was hoping this would run great, but it only ran okay. There was noticeable lag, the VMs would sometimes go offline, and despite many settings tweaks, it just wasn't a good experience. We added new local SSD pools to each of the ProxMox servers on which to run VMs, and the VMs run smoothly in this configuration. For migration and backup purposes, the NFS backend to TrueNas works great. Having learned this lesson, we built our Hyper-V servers with local flash storage.

The network is segmented such that our TrueNas boxes are intentionally not easily accessible from our primary network. ProxMox has built-in functionality for backing up to an NFS share, and we're utilizing this, but I didn't have a great way to send these backups offsite. I found a very slick video on backing up from ProxMox to an S3 bucket, and as I started down the path of hooking ProxMox to my S3 storage bucket on Linode, I began to recall seeing some cloud credentials settings on TrueNas and wondered if this would be the better option. Sure enough, it's built right into TrueNas, so I just needed to configure it there rather than on all three ProxMox boxes.

If you're reading this, perhaps you are looking to hook your TrueNas infrastructure to cloud storage. Follow the steps below to beef up your backup game:

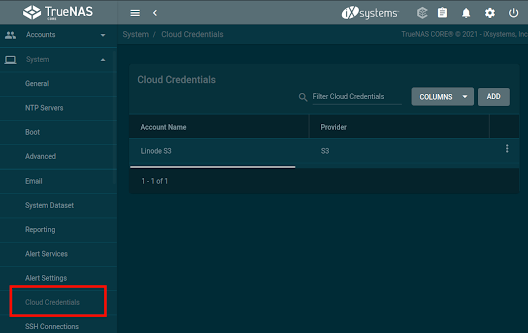

After creating your bucket(s) on your cloud provider of choice, make note of your access keys and navigate to Cloud Credentials on TrueNas:

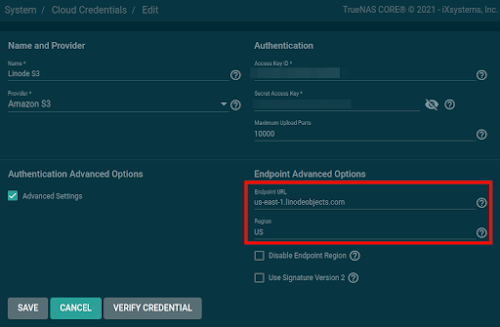

Enter the Access Key ID and Secret Access Key provided by your hosting provider. If you're using an S3-capable provider other than AWS, as I am, you'll need to go into Advanced Settings to specify the Endpoint URL. For Linode, the endpoint depends on the datacenter that hosts your bucket, so adjust it as necessary.
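As a rough illustration of the Endpoint URL, here is a minimal Python sketch that assembles one from a cluster ID. It assumes Linode's `<cluster-id>.linodeobjects.com` hostname pattern, and `us-east-1` is only a sample cluster ID; the function names are hypothetical, so confirm the real value on your bucket's details page before pasting it into TrueNas.

```python
from urllib.parse import urlparse


def linode_endpoint(cluster_id: str) -> str:
    """Build an S3 endpoint URL for a Linode Object Storage cluster.

    Assumption: endpoints follow the <cluster-id>.linodeobjects.com pattern.
    """
    cluster_id = cluster_id.strip().lower()
    if not cluster_id:
        raise ValueError("cluster_id must not be empty")
    return f"https://{cluster_id}.linodeobjects.com"


def looks_like_endpoint(url: str) -> bool:
    """Sanity check: HTTPS, a hostname, and no bucket path appended."""
    parsed = urlparse(url)
    return (
        parsed.scheme == "https"
        and bool(parsed.hostname)
        and parsed.path in ("", "/")
    )


# "us-east-1" is a sample cluster ID; substitute your bucket's datacenter.
endpoint = linode_endpoint("us-east-1")
print(endpoint, looks_like_endpoint(endpoint))
```

The check rejects URLs with a bucket name appended as a path, since the endpoint field should hold the service host and the bucket is chosen separately in the sync task.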

After you've configured your cloud credentials, go to Cloud Sync tasks:

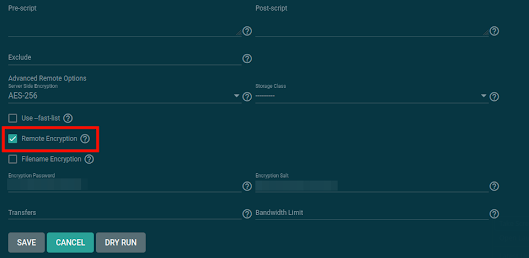

Provide a description for the task and choose the directory you want to back up. The options are mostly self-explanatory, but some of them will need to be changed:
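Which settings need changing depends on your setup, but one worth understanding before the first run is the transfer mode. TrueNas offers SYNC, COPY, and MOVE, and they differ in what happens to files that exist on only one side. The toy model below is a sketch on plain Python dicts, not TrueNas code; the `push` function and the file names are hypothetical and exist only to show the difference.

```python
# Toy model of a push from a local dataset to a bucket.
# Each side is a dict of {path: content}. This is NOT TrueNas code.


def push(source: dict, dest: dict, mode: str) -> tuple[dict, dict]:
    """Return (new_source, new_dest) after pushing source to dest."""
    if mode not in ("COPY", "SYNC", "MOVE"):
        raise ValueError(f"unknown mode: {mode}")

    new_source = dict(source)
    new_dest = dict(dest)
    new_dest.update(source)  # every mode uploads new and changed files

    if mode == "SYNC":
        # SYNC also deletes remote files that no longer exist locally.
        new_dest = {p: c for p, c in new_dest.items() if p in source}
    elif mode == "MOVE":
        # MOVE removes the local copies once they are transferred.
        new_source = {}
    return new_source, new_dest


# Hypothetical backup files: one fresh local backup, two older remote ones.
local = {"vm-100-backup": "tuesday"}
bucket = {"vm-100-backup": "monday", "vm-099-backup": "old"}

for mode in ("COPY", "SYNC", "MOVE"):
    src, dst = push(local, bucket, mode)
    print(mode, "local:", sorted(src), "bucket:", sorted(dst))
```

For offsite backups the practical question is retention: in this model, SYNC prunes the bucket whenever a backup is pruned locally, while COPY leaves old backups in the bucket until you remove them yourself.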
